Gesche Huebner, Michael Fell and Nicole Watson

Abstract

Energy use is of crucial importance for the global challenge of climate change and is also an essential part of daily life. Hence, research on energy needs to be robust and valid. Other scientific disciplines have experienced a reproducibility crisis, i.e. existing findings could not be reproduced in new studies. The ‘TReQ’ approach is recommended to improve research practices in the energy field and arrive at greater transparency, reproducibility and quality. A highly adaptable suite of tools is presented that can be applied to energy research approaches across this multidisciplinary and fast-changing field. In particular, four tools are introduced (preregistration of studies, making data and code publicly available, using preprints, and employing reporting guidelines) to raise the standard of research practices within the energy field. The wider adoption of these tools can facilitate greater trust in the findings of research used to inform evidence-based policy and practice in the energy field.

Practice relevance

Concrete suggestions are provided for how and when to use preregistration, open data and code, preprints, and reporting guidelines, offering practical guidance for energy researchers for improving the TReQ of their research. The paper shows how employing tools around these concepts at appropriate stages of the research process can assure end-users of the research that good practices were followed. This will not only increase trust in research findings but also can deliver other co-benefits for researchers, e.g. more efficient processes and a more collaborative and open research culture. Increased TReQ can help remove barriers to accessing research both within and outside of academia, improving the visibility and impact of research findings. Finally, a checklist is presented that can be added to publications to show how the tools were used.

1. Introduction

Energy use is key to global challenges such as climate change, and it plays an important role in our daily lives. But how sure can one be that research findings in this area can be trusted? This paper argues that the limited employment of principles and tools to support greater openness could be making this hard to assess. It offers a practical guide to the tools and principles that energy researchers could use to achieve high-standard research practices with regard to openness.

‘Research practices’ is a broad term. Many universities and funding bodies have issued guidance on good research practices (e.g. MRC n.d., University of Cambridge n.d.), which covers, for example, openness, supervision, training, intellectual property, use of data and equipment, publications of research results, and ethical practice. This paper adopts a narrower definition of good research practices, namely research practices related to transparency, reproducibility and quality (TReQ). This is similar to Hardwicke et al.’s (2020) discussion of ‘reproducibility-related research practices’, but takes a broader view as, for reasons outlined in the paper, a strict notion of reproducibility is not applicable to all research in the energy field.

Increasingly, researchers are advocating openness and transparency as an essential component of good research (Munafò et al. 2017). Unless sufficient details of studies are shared, it can be hard for other researchers to tell if the conclusions are justified, check the reproducibility of the findings and undertake effective synthesis of the evidence. However, it is not self-evident which aspects of studies need to be shared. A range of tools and practices have been developed by the scientific community to guide researchers on what to share, and when (e.g. the Open Science Framework—OSF). These include guidelines on which details of studies to report (e.g. Equator Network 2016), preregistration of theory-testing work (Chambers et al. 2014), and the sharing of data and code (Van den Eynden et al. 2009; Wilkinson et al. 2016). Some disciplines—such as medicine and psychology—have been at the forefront of encouraging the adoption of these tools, following the acknowledgement of failures to replicate findings from scientific studies (e.g. Anvari & Lakens 2018; Begley & Ellis 2012). In energy research, however, they remain largely a niche concern (Huebner et al. 2017). Consequently, for reasons outlined here, evidence-based policy and practice may be built on shaky foundations.

‘Energy research’ is difficult to define, given that it does not have a clear disciplinary home; research on energy can be driven from a physics, medicine or social science perspective. Here, inspiration for how to define ‘energy research’ has been drawn from its description in the journal Energy Research & Social Science (n.d.). Energy research is taken to cover:

a range of topics revolving around the intersection of energy technologies, fuels, and resources on one side; and social processes and influences—including communities of energy users, people affected by energy production, social institutions, customs, traditions, behaviors, and policies—on the other.

This definition encapsulates not only explicitly social science or sociotechnical research (Love & Cooper 2015) but also research projects such as building monitoring or modelling work requiring the acknowledgement of human behaviour (e.g. Mavrogianni et al. 2014; AECOM 2012). It excludes, however, more fundamental research fields, such as thermodynamics.

The remainder of the paper is structured as follows. The rest of this introduction defines transparency, reproducibility and quality (TReQ), describes their general benefits and then considers them in the specific context of energy research. Four tools aimed at improving openness are then presented in detail, namely preregistration of studies/analysis, use of reporting guidelines, publishing preprints, and sharing of data and analysis code. Practical suggestions on tool implementation are provided, including a checklist. The discussion considers the limitations of the stated approach and other potential tools which can improve the transparency, reproducibility (where appropriate) and quality of research across the field.

1.1 Defining TReQ

Transparency has a range of meanings (Ball 2009). The definition from Moravcsik (2014: 48) is used here, i.e. ‘the principle that every […] scientist should make the essential components of his or her work visible to fellow scholars’. Transparency is at the core of the approach because it enables informed interpretation and synthesis of the findings, as well as supporting reproducibility and general research quality (Miguel et al. 2014). Literature that explicitly considers ‘research quality’ uses a wide variety of terms to describe its main features, ranging from specific methodological points such as selecting appropriate sample sizes (UKCCIS 2015) to general perceptions of legitimacy by end users (Belcher et al. 2016). The National Center for Dissemination of Disability (2005) lists the frequently mentioned points for assessing research quality, which include, amongst others, posing important questions, using appropriate methods, assessing bias and considering alternative explanations for findings. Improving openness in research could contribute towards enhancing quality by promoting a deeper consideration of the choices made throughout the research process, in the knowledge that these will have to be described and justified. For example, composing a pre-analysis plan (PAP) obliges a researcher to set out and justify data-collection choices early in the process, creating the opportunity for omissions to be spotted and rectified in time.

Different scientific disciplines define reproducibility and replicability differently – and at times contradictorily (National Academies of Sciences, Engineering, and Medicine 2019). However, there is general consensus that one term characterises cases whereby new data are collected following the approach of an existing study, and the other characterises cases whereby the original authors’ data and code are used to repeat an existing analysis. Barba (2018) presents groups of definitions of reproducibility and replicability by discipline. However, energy research—given it is not a discipline as such—does not fit into these groups. Given that there is no obvious reason to choose a definition based on discipline, here reproducibility is taken to mean that independent studies testing the same thing should obtain broadly the same results (Munafò et al. 2017), for studies where there is the assumption that the findings hold beyond the original sample tested. This definition was used by the journal Nature (2016) when it asked scientists about issues around this topic. The current paper is more concerned with reproducibility, defined as just stated, than replicability, given that it is a broader concept.

1.2 General benefits of TReQ

Some disciplines are finding themselves in a reproducibility crisis, in which the results of many scientific studies cannot be reproduced. When 100 studies from three high-ranking psychology journals were repeated, only 36% of the repeats had significant findings, compared with 97% of the original studies, and the mean effect size was about half that of the original studies (Open Science Collaboration 2015). Preclinical research also showed a spectacularly low rate of successful reproduction of earlier studies (Prinz et al. 2011; Begley & Ellis 2012). In a survey, 70% of approximately 1500 scientists indicated that they had tried and failed to reproduce another scientist’s experiments, and more than half had failed to reproduce their own experiments (Baker & Penny 2016). The survey had no explicit category for energy research. However, energy research likely falls within the categories of engineering, earth and environment, and ‘other’, for all of which at least 60% of respondents indicated a failure to reproduce another scientist’s experiment. This is a problem if policy and research are being built on effects which are either not real or do not apply in the context in which they are being deployed.

Outright data fraud does happen, with one estimate of about 1.5% of all research being fraudulent (Wells & Titus 2006). Overall, fraudulent practice seems relatively rare, although the evidence of its prevalence is in itself subject to definitional issues and considered unreliable (George 2018). To the authors’ knowledge, there has been no systematic evaluation of discipline-specific differences in prevalence of data fraud; however, Stroebe et al. (2012) showed that psychology is no more vulnerable to fraud than the biomedical sciences in a convenience sample of fraud cases. The TReQ approach can discourage data fraud and make it easier to detect. More importantly, it can deter so-called questionable research practices (QRPs), defined as ‘design, analytic, or reporting practices that have been questioned because of the potential for the practice to be employed with the purpose of presenting biased evidence in favour of an assertion’ (Banks et al. 2016a: 3).

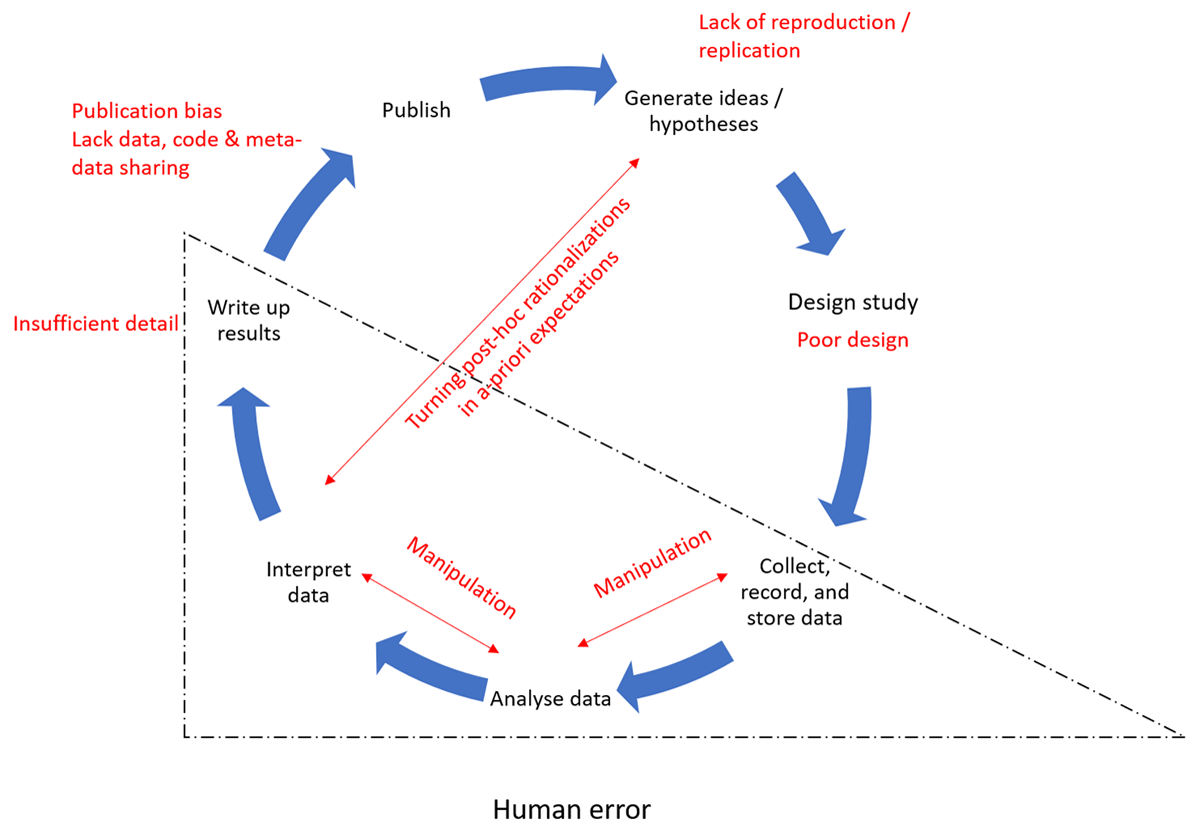

As Figure 1 illustrates, pure reproducibility studies are rare as they are often viewed as having a lower status than novel discoveries (Park 2004). Poor study design can encompass a wide range of issues, including missing important covariates or potentially biasing factors, or an increased likelihood of false-positives or false-negatives (Button et al. 2013). While Figure 1 applies most directly to challenges in quantitative research, many of the issues it highlights (such as poor design, selectivity in the collection, analysis and interpretation of data, and insufficient detail in reporting) apply equally to qualitative approaches (DuBois et al. 2018; Haven & Van Grootel 2019). For example, a study that did not report details of how interview participants were recruited could (intentionally or unintentionally) obscure any bias this process may have introduced.

‘Manipulation’ is a generic term that stands for changing, analysing and reporting data in ways that support outcomes deemed favourable by the researcher. It is important to stress that QRPs can be employed quite innocently without any intent to mislead, for instance, through human error (Figure 1, dot-and-dash triangle). Regardless of intent, the effect is still to mislead. Both qualitative and quantitative work are open to the manipulation of data collection or analysis to produce interesting or ‘significant’ findings. Examples of manipulation in quantitative work include only reporting part of the results, rounding down p-values and p-hacking. The last of these refers to the practice of taking arbitrary decisions with the data in order to achieve results below the typical significance threshold of p < 0.05 (Banks et al. 2016b; Simmons et al. 2011). The impact of p-hacking is to overstate the strength or even existence of associations or effects. In qualitative work, data manipulation could take the form of selectively reporting quotations or perspectives to support a prior expectation (Haven & Van Grootel 2019; Moravcsik 2014).
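How one common form of p-hacking overstates the existence of effects can be illustrated with a small simulation. The following is a minimal sketch under stated assumptions, not an analysis from the cited studies: two groups are drawn from the same distribution (so there is no true effect), each study measures several independent outcomes, and the hypothetical researcher reports only the smallest p-value. The function names, sample sizes and the use of a simple z-test with known unit variance are all illustrative choices.

```python
import math
import random
import statistics


def two_sided_p(group_a, group_b):
    """Two-sided p-value from a z-test on the difference in means.

    Assumes both groups have a known standard deviation of 1 (illustrative).
    """
    n_a, n_b = len(group_a), len(group_b)
    diff = statistics.fmean(group_a) - statistics.fmean(group_b)
    z = diff / math.sqrt(1 / n_a + 1 / n_b)
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(n_outcomes, n_studies=2000, n_per_group=30,
                        alpha=0.05, seed=1):
    """Share of null studies reporting at least one 'significant' outcome."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        p_values = []
        for _ in range(n_outcomes):
            # Both groups come from the same distribution: no true effect.
            a = [rng.gauss(0, 1) for _ in range(n_per_group)]
            b = [rng.gauss(0, 1) for _ in range(n_per_group)]
            p_values.append(two_sided_p(a, b))
        # The hypothetical researcher reports only the best-looking outcome.
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 5):
        print(f"{k} outcome(s): false-positive rate = {false_positive_rate(k):.3f}")
```

With a single prespecified outcome the false-positive rate stays close to the nominal 5%. With five independent outcomes and selective reporting, the expected rate is 1 − 0.95^5 ≈ 23%. Real outcome measures are usually correlated, so the inflation in practice would be smaller than under this independence assumption, but the direction of the bias is the same.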

One study shows that more than 70% of respondents indicated they had published work not reporting all dependent measures (John et al. 2012). Taking liberal decisions around p-values was reported by 11% of researchers and turning post-hoc explanations into a priori expectations by about 50% (Banks et al. 2016a).

Another form of manipulation is known as HARKing, or ‘hypothesising after results are known’. For example, a researcher may find a significant association between two variables and then write up the study as if they anticipated the association. This portrays an exploratory study as a confirmatory one (Kerr 1998). Again, this can be prompted by a perception that journals favour studies with significant effects compared with those that report null results. For example, a study of medical trials that were completed but not published found that researchers cited ‘negative’ results and lack of interest as the major reasons for non-publication (Dickersin et al. 1987). This ‘publication bias’ creates a skewed representation of the true findings in the published literature. Withholding data, code, metadata or detail on important characteristics of studies makes it harder to spot such practices, and less likely that high-quality replication studies can be carried out.

The use of tools to increase TReQ also has wider advantages around the accessibility of research. In a 2017 study, based on a random sample of 100,000 journal articles with Crossref DOIs, 72% were estimated to be closed access, i.e. not freely accessible under a recognised license or on the publisher’s page (Piwowar et al. 2018). The inaccessibility of research reports has a general slowing and discriminatory effect on the progress of research (Suber 2013; Eysenbach 2006); articles behind a paywall are cited less (Tennant et al. 2016) and, hence, will likely shape future research to a lesser extent. Open access to research findings and data, on the other hand, can support a range of social and economic benefits such as enabling citizen science initiatives and reducing costs for companies using scientific research to inform their innovation (Tennant et al. 2016; Fell 2019). Funders are increasingly mandating open-access publishing. However, routes for open access include depositing the manuscript in a repository where it is embargoed for a certain period and hence only accessible after a delay (e.g. UKRI n.d.). Tools focused on TReQ contribute to making science openly available.

1.3 TReQ in energy research

Whilst more transparent, reproducible and high-quality research is important in any area, it is especially pressing in the applied area of energy research. Energy is ubiquitous in people’s lives, including for heating and cooling homes, for food production and preparation, and for transportation. Furthermore, climate change is a critical problem. As decarbonisation of the energy sector forms a key part of national and international strategies for reducing carbon emissions (e.g. European Commission n.d.; HM Government 2017), research in this field can be directly relevant to climate change mitigation or adaptation.

In order to inform policy, evidence needs to be of high quality and reliable. Despite its crucial importance, energy research remains behind other disciplines when it comes to best research practices (Huebner et al. 2017; Pfenninger et al. 2018). An examination of 15 years of energy research found that almost one-third of the more than 4000 studies examined reported no research design or method (Sovacool 2014), which means that anyone who wanted to reproduce them would struggle, given the lack of necessary information.

Several reasons may explain why. Energy research is highly multidisciplinary and uses a multitude of methods (e.g. interviews, focus groups, surveys, field and laboratory experiments, case studies, monitoring and modelling). This diversity of academic background and methods makes it harder to design good-practice guidance applicable to the majority of researchers, and for researchers to judge the quality of work outside their discipline (Eisner 2018; Schloss 2018). In a more homogeneous field, agreement on best practices is likely easier to reach.

Much energy research is focused on the current situation with a strong emphasis on contextual factors, fully aware that in 10 years things will change. For example, the prevalence of electric cars is growing rapidly, with an increase of 63% between 2017 and 2018 (IEA n.d.). Hence, it is likely that factors that predict the purchase of an electric car in 2017 are quite different to those in 2018.

There are several research areas within energy where reproducibility, in a strict sense, might not even be an appropriate term to discuss. Many qualitative and participatory research projects focus on specific case studies, and even very similar work conducted in different contexts would be expected to yield different results. For this reason, consideration of the reproducibility crisis (and appropriate responses to it) is likely to be less salient. However, even for those studies, openness and transparency are important concepts so that others can make an informed judgement about the quality of the conducted research.

A final practical factor that makes it harder to reproduce previous research is that field trials, in particular, are extremely time-consuming and expensive. For example, the set-up and running of a field trial related to energy usage in social housing cost about £2 million out of a £3.3 million project (D. Shipworth, personal communication, 2020; Ofgem n.d.).

Despite all these challenges, energy researchers can be doing much more to integrate TReQ research practices into their work.

In the remainder of this paper, a set of simple approaches is presented which almost all energy researchers should now consider employing.

2. The TReQ approach

From the suite of approaches that support TReQ research, this paper focuses on four: study preregistration, reporting guidelines, preprints and code/data-sharing. The criteria for deciding which ones to focus on were that they should all be:

- applicable to the wide multidisciplinary variety of research approaches employed in energy

- flexible in terms of how they can be employed; thus, researchers can use them in ways that they find most useful rather than feeling constrained by them

- low barrier to entry; they are easy to pick up and require little specialist knowledge (at least in basic applications)

2.1 Preregistration of analysis plans

Preregistration involves describing how researchers plan to undertake and analyse research before performing the work, and (when applicable) what they expect to find (Nosek et al. 2018). Whilst more common in deductive (theory-testing) quantitative research, preregistration can be applied to any type of research including qualitative research (Haven & Van Grootel 2019) and modelling approaches (Crüwell & Evans 2019). In its basic form, for all types of research, preregistration should specify the study aims, type of data collection, tools used in the study and data analysis approach. In more detailed preregistrations, justification of these choices may also be included, e.g. why the selected method and analysis/framework are most appropriate to answer the relevant research question(s). For a quantitative approach, preregistration usually includes details on the key outcome measures and statistical analysis, including how missing data and outliers will be handled. Where applicable, concrete hypotheses are also listed. This preregistration, often called a pre-analysis plan (PAP), is put online with a certified time-stamp and registration number. It can either be immediately shared publicly or kept private until a later date (such as the publication of a paper).
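The elements listed above can be thought of as a simple checklist. The sketch below, offered as an illustration rather than an official template, encodes them as a Python data structure and reports which elements are still empty. The class name, field names and example content are hypothetical; the registration forms in Table 1 remain the authoritative templates.

```python
from dataclasses import dataclass, field


@dataclass
class PreAnalysisPlan:
    """Hypothetical container for the elements of a preregistration."""
    # Basic elements, relevant to all types of research
    study_aims: str = ""
    data_collection: str = ""
    tools: str = ""
    analysis_approach: str = ""
    # Elements usually added for quantitative approaches
    outcome_measures: str = ""
    missing_data_handling: str = ""
    outlier_handling: str = ""
    # Concrete hypotheses, listed only where applicable
    hypotheses: list = field(default_factory=list)


BASIC_FIELDS = ("study_aims", "data_collection", "tools", "analysis_approach")
QUANTITATIVE_FIELDS = ("outcome_measures", "missing_data_handling",
                       "outlier_handling")


def missing_elements(plan, quantitative=False):
    """Return the names of required elements that have not been filled in."""
    required = BASIC_FIELDS + (QUANTITATIVE_FIELDS if quantitative else ())
    return [name for name in required if not getattr(plan, name).strip()]


# Illustrative (invented) plan for a hypothetical field trial
plan = PreAnalysisPlan(
    study_aims="Test whether in-home displays reduce electricity use",
    data_collection="Smart meter readings from a randomised field trial",
    tools="Half-hourly smart meter data; recruitment survey",
    analysis_approach="Difference in mean daily consumption between groups",
)
print(missing_elements(plan))                     # []
print(missing_elements(plan, quantitative=True))  # the three quantitative fields
```

Hypotheses are deliberately not treated as required, since they are listed only where applicable.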

Preregistration has three main benefits. First, it adds credibility to the results because researchers cannot be accused of QRP, such as changing their analyses and expectations afterwards to fit the data. As discussed, these practices give an impression of greater confidence in the results than is warranted, and allow the presenting of an exploratory finding as a confirmatory one (Wagenmakers & Dutilh 2016; Simmons et al. 2011).

Second, preregistration contributes to mitigating publication bias and file drawer problems. Academic publishing is biased towards publishing novel and statistically significant findings to a much greater extent than non-significant effects (Rosenthal 1979; Ferguson & Brannick 2012). If non-significant findings are not published, this reduces scientific efficiency since other researchers might repeat approaches that have been previously tried and failed. Whilst it is unrealistic to expect researchers to evaluate numerous study preregistrations and follow up on unpublished results, a systematic review might do so. Furthermore, a researcher finding a null effect after having written and followed a PAP might be more likely to attempt publishing these findings. Registered reports, where a journal reviews the equivalent of a detailed PAP and provisionally commits to publishing a paper regardless of the results, show a greater frequency of null results than conventionally published papers (Warren 2018).
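The distortion caused by the file drawer problem can be illustrated with a small simulation. This is a minimal sketch under stated assumptions and does not reproduce any cited analysis: many identical two-group studies of a small true effect are simulated, and only those reaching p < 0.05 are treated as ‘published’. The true effect size, sample size and function name are hypothetical choices, and a z-test with known unit variance stands in for whatever test a real study would use.

```python
import math
import random
import statistics


def simulate_literature(true_effect=0.2, n_studies=5000, n_per_group=30,
                        alpha=0.05, seed=1):
    """Compare the mean effect across all studies with the 'published' subset.

    Returns (mean effect of all studies, mean effect of significant studies,
    number of significant studies).
    """
    rng = random.Random(seed)
    standard_error = math.sqrt(2 / n_per_group)  # unit variance assumed
    all_effects, published_effects = [], []
    for _ in range(n_studies):
        treated = [rng.gauss(true_effect, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        effect = statistics.fmean(treated) - statistics.fmean(control)
        p_value = math.erfc(abs(effect / standard_error) / math.sqrt(2))
        all_effects.append(effect)
        # Only statistically significant studies leave the file drawer.
        if p_value < alpha:
            published_effects.append(effect)
    published_mean = (statistics.fmean(published_effects)
                      if published_effects else float("nan"))
    return statistics.fmean(all_effects), published_mean, len(published_effects)


if __name__ == "__main__":
    all_mean, published_mean, n_published = simulate_literature()
    print(f"mean effect, all studies:       {all_mean:.2f}")
    print(f"mean effect, published studies: {published_mean:.2f}")
    print(f"studies published:              {n_published} of 5000")
```

Under these assumptions the average across all studies recovers the true effect, whereas the average across the significant subset is several times larger, because small studies only reach significance when sampling error happens to exaggerate the effect. A literature review that can also draw on preregistered but unpublished studies is less exposed to this skew.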

Third, preregistration brings direct benefits to the researcher (Wagenmakers & Dutilh 2016). It helps with planning study details and getting early input into research design and analysis (van’t Veer & Giner-Sorolla 2016). This will likely lead to better conducted studies and faster analysis after data collection. It also allows researchers to stake an early claim to the area they are working in and signals that they are committed to research transparency. Whilst preregistration frontloads some of the work of analysis, time can be regained at the analysis stage because important decisions about what to include in the analysis have already been made (Wagenmakers & Dutilh 2016). Preregistration does not preclude more creative exploratory analysis on the data; it is only important that any deviations from the PAP are noted in publications (with a brief justification) and that non-prespecified findings are identified as such (Claesen et al. 2019; Frankenhuis & Nettle 2018).

In some disciplines, e.g. economics, planned analyses are very complex and preregistration documents might become unwieldy if they consider all possible options for nested hypotheses and analyses. In such cases, a simplified preregistration laying out the key aspects may be deemed sufficient (Olken 2015).

Table 1 overviews the preregistration forms for different research approaches, e.g. secondary data, qualitative data etc., as derived from the OSF (n.d. a).

Table 1: Overview of the preregistration forms for different research approaches

| Registration forms | Description | URL |
|---|---|---|
| OSF Prereg | Generic preregistration form | osf.io/prereg/ |
| AsPredicted Preregistration | Generic preregistration form | aspredicted.org/ |
| Replication Recipe | For registering a replication study | osf.io/4jd46/ |
| Qualitative Research Preregistration | For qualitative research | osf.io/j7ghv/wiki/home/ |
| Secondary Data Preregistration | For preregistering a research project that uses an existing data set | osf.io/x4gzt/ |
| Cognitive Modeling (Model Application) | For preregistering a study using a cognitive model as a measurement tool | osf.io/2qbkc/wiki/home/ |

Some of these forms can be filled in directly as guided workflows; others are stand-alone templates that then need to be uploaded to a repository.

The OSF and AsPredicted.org are used across disciplines; the AEA Registry is primarily used in economics, EGAP in political science and ClinicalTrials.gov in the medical sciences.

The latest point for preregistration should be before data analysis commences. However, best practice is to work on preregistration whilst designing the study to reap the benefits from it. When writing up the research, details of the PAP (e.g. hyperlink and registration number) are included in any output. Deviations from the prespecification should be noted and justified (e.g. Nicolson et al. 2017: 87).

2.2 Reporting guidelines

When reporting research, it can be difficult to decide which details are important to include. Missing out important details of the work creates several issues. It can make it hard for readers to judge how well-founded or generalisable the conclusions are and may make the work difficult or impossible for others to reproduce. Future evidence reviewers may have difficulty integrating the method and findings, limiting the extent to which work can contribute to evidence assessments.

To help address these problems, sets of reporting guidelines have been developed spelling out precisely which details need to be included for different kinds of study. These include details such as sampling methods and how recruitment was undertaken, although the required items naturally vary between study types. Details of some prominent guidelines are provided in Table 2. Further options and a guide to which checklist to use are available at Equator Network (2016).

Table 2: Selected main reporting guidelines likely to be applicable to energy research

| Study type | Reporting guidelines | Notes | References |
|---|---|---|---|
| Randomised trial | CONSORT | Requires a flowchart of the phases of the trial and includes a 25-item checklist | Schulz et al. (2010) |
| Systematic review | PRISMA | Flowchart and 27-item checklist | Moher et al. (2009) |
| Predictive model | TRIPOD | 22-item checklist | Collins et al. (2015) |
| Qualitative study (interviews and focus groups) | COREQ | 32-item checklist | Tong et al. (2007) |

Reporting guidelines give added confidence that important details are being reported, and a way of justifying such choices (e.g. in response to peer-review comments). They can also make it quicker and easier to write up reports, drawing on the guidelines to help structure them. Guidelines are not only useful at the reporting stage. By becoming familiar early on with standard reporting requirements, researchers can ensure they are considering all the details they will need to report and make note of the important steps during research design and collection. Checklists can be used to explicitly structure reports or, at the very least, as a check to ensure that all relevant details are included somewhere. It is usual to cite the guidelines that are being followed.

Following reporting guidelines is, however, not always straightforward. They are often developed for a very specific purpose, such as medical randomised controlled trials. Even if researchers identify the most suitable type of guideline for their own work, the guidelines may still call for the reporting of irrelevant details. In such cases researchers can apply the guidelines at their discretion, but it is better to consider each point and consciously exclude it than to risk omitting important details.

2.3 Preprints of papers

Scholarly communication largely revolves around the publication of articles in peer-reviewed journals. The peer review process, while imperfect (Smith 2006), fulfils the important role of performing an independent check on the rigour with which the work was conducted and the justifiability of the conclusions that are drawn. However, peer review and other stages of the academic publication process mean that substantial delays can be introduced between the results being prepared for reporting and publication.

This results in an extended period before potentially useful findings can be acted upon by both other researchers and those outside academia, especially where researchers or institutions, including universities in developing countries, are unable to pay for open access or journal subscriptions (Suber 2013: 30). This is especially problematic in areas such as tackling the climate crisis, when rapid action based on the best available evidence is essential.

The academic community has responded by instituting preprints, i.e. pre-peer-review versions of manuscripts that are made freely accessible. Preprints allow early access to, and scrutiny of, research findings, with no affordability constraints. They also allow authors to collect input from peers before (or in addition to) the peer-review process, with the potential to improve the quality of the reporting or interpretation.

However, because preprints are not peer reviewed, there are legitimate concerns that preprints presenting findings based on erroneous methods could be dangerously misleading for users (Sheldon 2018). Authors may similarly be worried about putting their work out for wider scrutiny without the independent check that peer review provides (Chiarelli et al. 2019). Preprints are (or at least should be) clearly labelled as such, and a ‘buyer beware’ approach should be taken by readers. Indeed, peer review does not obviate the need for critical use. An international qualitative study with key stakeholders such as universities, researchers, funders and preprint server providers showed that there is concern that preprints may be considered by some journals as a ‘prior publication’, making it harder to publish the work (Chiarelli et al. 2019). While it is always important to check, almost all quality journals now explicitly permit preprints. Finally, it is important to set any concerns against the benefits brought by speedier publication described above, especially for those who cannot pay to publish or access research outputs.

Publishing a preprint is as easy as selecting a preprint server and uploading a manuscript at any point before ‘official’ publication (most commonly around the point of submission to a journal). A range of such servers exists, probably the best known of which is arXiv.org, a preprint server for the physical sciences. Table 3 gives an overview of the 10 most common international preprint servers as listed on the OSF (n.d. b), with the addition of medRxiv, which was identified as another particularly popular preprint server (Hoy 2020). The OSF preprint server provides a single search interface to a growing number of preprint sources, with more than 2 million preprints accessible, and also allows the uploading of new preprints.

Table 3: Overview of the common preprint servers

| Name | Homepage | Topic areas |
|---|---|---|
| OSF Preprints | osf.io/preprints/ | Any (plus serves as a search interface for several preprint servers) |
| arXiv | arxiv.org/ | Physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, economics |
| bioRxiv | www.biorxiv.org/ | Biology |
| Preprints.org | www.preprints.org/ | Any |
| PsyArXiv | psyarxiv.com/ | Psychological sciences |
| RePEc | www.repec.org/ | Economics and related sciences |
| SocArXiv | osf.io/preprints/socarxiv | Social sciences |
| EarthArXiv | eartharxiv.org/ | Earth science and related domains of planetary science |
| engrXiv | engrxiv.org/ | Engineering |
| LawArXiv | lawarxiv.info/ | Legal scholarship |
| medRxiv | www.medrxiv.org/ | Health sciences |

It is also possible to share preprints through institutional repositories and more general scientific repositories such as Figshare. Preprint servers will usually assign a digital object identifier (DOI), and preprints will therefore show up in, for example, Google Scholar searches. Because preprints are assigned a DOI, it is not possible to delete them once they have been uploaded. However, revised versions can be uploaded, and once the paper is published in a peer-reviewed version, this version can be linked to the preprint.

2.4 Open data and open code

Open data means to make data collected in a research study available to others, usually in an online repository. Open data must be freely available online and in a format that allows other researchers to reuse the data (Huston et al. 2019). Open code is equivalently making the computer (or qualitative analysis) code that was used to analyse certain data publicly available. Both fall under the umbrella term of ‘open science’ (Kjærgaard et al. 2020; Pfenninger et al. 2017).

Open data and open code can allow the identification of errors (both intentional and unintentional) and allow exact replication, i.e. the rerunning of analyses. The real strength of the publication of data sets and computer code is in combination with PAPs: without prespecification of the data collection, cleaning and analysis processes, uploaded data could still be manipulated, by, for example, omitting certain variables or deleting outliers. Together with PAPs, cherry-picking of the results and other forms of p-hacking could be uncovered.

Open data and open code can increase productivity and collaborations (Pfenninger et al. 2017), as well as the visibility and potential impact of research. An analysis of more than 10,000 studies in the life sciences found that studies with the underlying data available in public repositories received 9% more citations than similar studies for which data were not available (Piwowar & Vision 2013). Research has also indicated that sharing data leads to more research outputs using the data set than when the data set is not shared (Pienta et al. 2010). Open code is also associated with studies being more likely to be cited than those that do not share their code: in a study of all papers published in IEEE Transactions on Image Processing between 2004 and 2006, the median number of citations for papers with code available online was three times higher than for those without (Vandewalle 2012). Open data can also be used for other purposes, such as education and training. Sharing data and code is most common in quantitative research, but is possible and encouraged in qualitative research (McGrath & Nilsonne 2018).

This increased usage and visibility through open code and open data can advance science further and more quickly, and also brings personal benefits to a researcher, given the importance of scientific citations and impact measures. The drive to publish in competitive academic environments may play a role in discouraging open-data practices: data collection can be a long and expensive process, and researchers might fear that premature data-sharing may deprive them of the rewards of their effort, including scientific prestige and publication opportunities (Mueller-Langer & Andreoli-Versbach 2018). Funders increasingly view research data as a public good that should be shared as openly as possible, with possible exemptions connected with ethical, commercial, etc. considerations (e.g. see the UKRI n.d. principles on research data). The UKRI principles also specifically acknowledge that a period of privileged (closed) access may be appropriate. While any such period will be largely up to researchers to determine and defend, European Commission (2016b) guidelines suggest that researchers should follow the principle ‘as open as possible, as closed as necessary’.

Making data and code publicly available does entail additional time and effort as certain steps have to be taken. For open code, this is mainly around ensuring that the code shared is sufficiently clear and well documented to allow others to follow the steps taken, although this should be good practice anyway. Publishing code might also encourage scientists to improve their coding abilities (Easterbrook 2014). Data need to be anonymised before publication. However, many data sets are already collected anonymously or have to be anonymised in any case, e.g. survey data collected using an online platform need to be anonymised to comply with the European Union’s General Data Protection Regulation (GDPR); hence, the additional time commitment is often minimal. For personal data, such as smart meter data, a solution might be to only allow access via a secure research portal. A particular challenge for energy research is that projects are often done with an external partner, e.g. a utility company, which might oppose the publication of data sets for competitive reasons. In the ideal case, this would be addressed before commencing any research and an agreement reached on which data (if not the total data set) could be shared (e.g. Nicolson et al. 2017). For video and audio recordings, data need to be transcribed, removing all personally identifiable features, and/or the sound of the audio source modified to avoid recognisability (Pätzold 2005). Again, this takes resources but might be required even for institutional storage, and plans for data sharing must in any case be disclosed in informed consent procedures. Where applicable, it is advisable to publish exact copies of survey and interview questions alongside code and data.

The webpage re3data.org lists more than 2000 research data repositories that cover data from different academic disciplines; however, this includes institutional webpages which cannot be accessed by everyone. It is also possible to publish data as a manuscript such as in Nature Scientific Data (for an example of a special collection on energy-related data, see Huebner & Mahdavi 2019). Similarly, a range of platforms exist for hosting and sharing code, such as GitHub. Some repositories are suitable for a range of uses under one project, such as the OSF, which allows the preregistration of studies and the depositing of data and code. OpenEI is a data repository specifically for energy-related data sets; whilst providing easy access, it does not generate a DOI.

When preparing data and code for sharing, the confidentiality of research participants must be safeguarded, i.e. all data need to be de-identified (for further details, see UK Data Service n.d.).
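As a rough illustration of one de-identification step, the sketch below drops direct identifiers from tabular records and replaces a participant ID with a keyed hash. The column names (`name`, `email`, `address`, `participant_id`, `daily_kwh`) and the functions `pseudonymise_id` and `deidentify` are hypothetical and chosen only for this example. Note that this is pseudonymisation rather than full anonymisation: indirect identifiers (or detailed consumption profiles) may still allow re-identification, so guidance such as that of the UK Data Service should be consulted for a real data set.

```python
import hashlib
import hmac

# Hypothetical column names for illustration; adapt to the actual data set.
DIRECT_IDENTIFIERS = {"name", "email", "address"}


def pseudonymise_id(participant_id, secret_key):
    """Map an ID to a stable keyed hash (pseudonymisation, not full anonymisation)."""
    digest = hmac.new(secret_key, str(participant_id).encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:12]


def deidentify(rows, secret_key, id_field="participant_id"):
    """Drop direct identifiers and replace the ID field with a pseudonym."""
    cleaned = []
    for row in rows:
        # Keep every column that is not listed as a direct identifier.
        kept = {key: value for key, value in row.items() if key not in DIRECT_IDENTIFIERS}
        kept[id_field] = pseudonymise_id(row[id_field], secret_key)
        cleaned.append(kept)
    return cleaned


if __name__ == "__main__":
    raw = [
        {"participant_id": 1, "name": "A. Example", "email": "a@example.org", "daily_kwh": 8.4},
        {"participant_id": 2, "name": "B. Example", "email": "b@example.org", "daily_kwh": 11.2},
    ]
    # The key must be kept private and never shared alongside the data.
    for record in deidentify(raw, secret_key=b"replace-with-a-private-key"):
        print(record)
```

Using a keyed hash rather than a plain hash means that someone who knows the original IDs cannot simply recompute the pseudonyms without the key, while repeated observations of the same participant can still be linked within the shared data set.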

The FAIR principles state that data should be (Wilkinson et al. 2016):

- findable: metadata and data should be easy to find for both humans and computers; machine-readable metadata are essential for automatic discovery of data sets and services

- accessible: once the user finds the required data set, they must be able to actually access it, possibly including authentication and authorisation

- interoperable: the data usually need to be integrated with other data; in addition, they need to interoperate with as wide as possible a variety of applications or workflows for analysis, storage and processing

- reusable: to be able to reuse data, metadata and data should be well described so that they can be replicated and/or combined in different settings

These principles have been widely endorsed, including by G20 leaders (European Commission 2016a) and the European Commission (2018). In 2018, the European Commission launched the European Open Science Cloud (EOSC), an initiative to create a culture of open research data that are findable, accessible, interoperable and reusable (FAIR) (Budroni et al. 2019).
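To make the idea of machine-readable metadata more concrete, the following minimal sketch stores a metadata record as JSON and checks that a small set of fields is present. The field names and the functions `missing_fields` and `to_json` are assumptions made for this illustration and are not taken from Wilkinson et al. (2016) or from any formal metadata standard; in practice, the schema required by the chosen repository should be followed. Loosely, a persistent identifier supports findability, a stated file format supports interoperability, and a licence together with variable descriptions supports reuse.

```python
import json

# Illustrative minimum set of fields; not taken from any formal metadata standard.
REQUIRED_FIELDS = ("title", "identifier", "description", "licence", "file_format", "variables")


def missing_fields(metadata):
    """Return the required fields that are absent or empty in a metadata record."""
    return [field for field in REQUIRED_FIELDS if not metadata.get(field)]


def to_json(metadata):
    """Serialise the record as machine-readable JSON, refusing incomplete records."""
    missing = missing_fields(metadata)
    if missing:
        raise ValueError("metadata incomplete, missing: " + ", ".join(missing))
    return json.dumps(metadata, indent=2, sort_keys=True)


if __name__ == "__main__":
    # All values below are placeholders, not a real data set.
    record = {
        "title": "Example household electricity survey",
        "identifier": "doi:10.0000/placeholder",
        "description": "Daily electricity use and survey responses (illustrative).",
        "licence": "CC-BY-4.0",
        "file_format": "text/csv",
        "variables": {"daily_kwh": "Daily electricity consumption in kWh"},
    }
    print(to_json(record))
```

A record like this would typically be deposited alongside the data files so that both humans and indexing services can interpret the data set without opening it.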

3. Practical suggestions on tool implementation

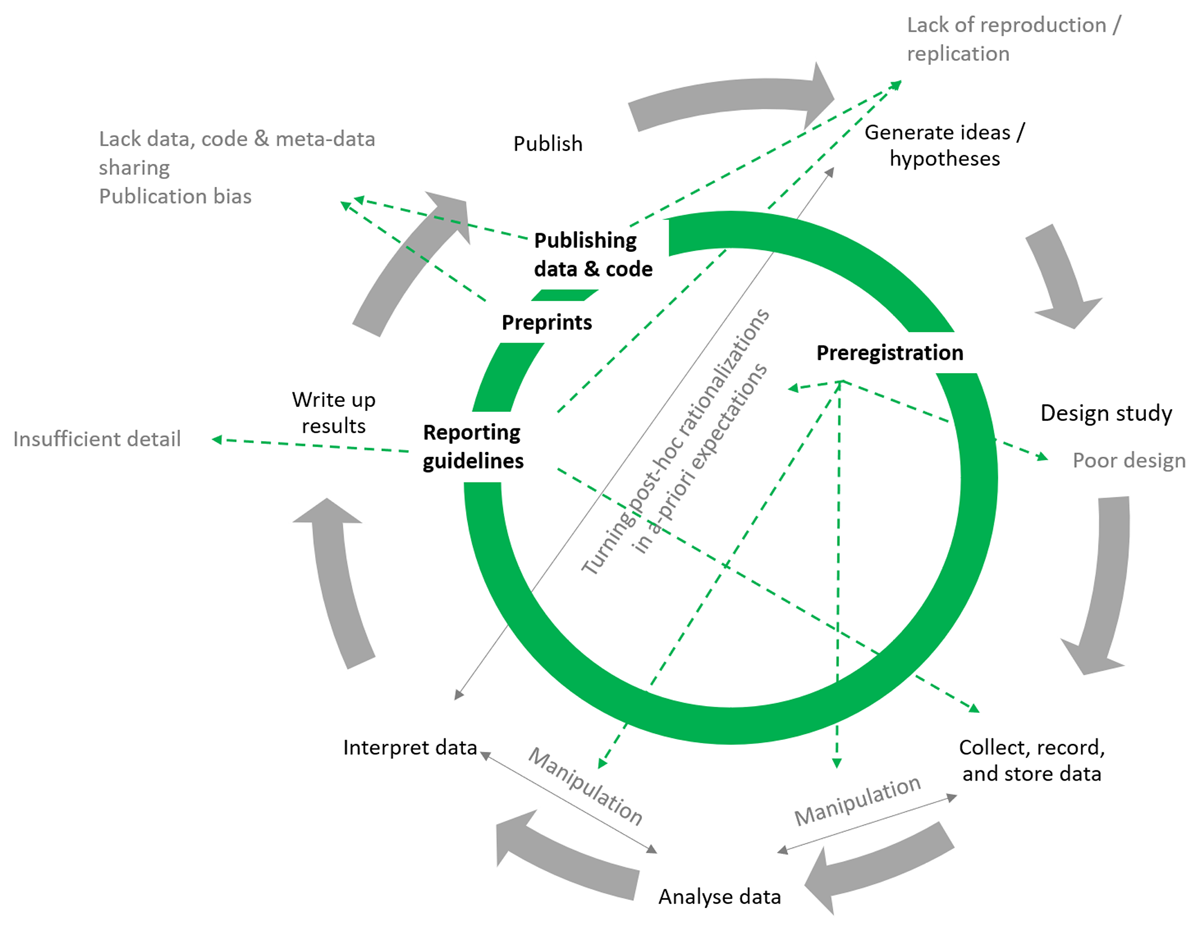

In summary, this paper has suggested four tools to be used by energy researchers to create transparent, reproducible and quality research. Figure 2 shows where in the research process the tools should be deployed and how they help to overcome problems within the scientific process.

Preregistration happens early in the research process but has impacts for many subsequent stages. It helps to identify problems in the study design when planning how to conduct and analyse the study and can hence improve poor design. It also mitigates issues around the manipulation of data. Through stating what outcomes are expected, preregistration reduces the likelihood of turning post-hoc rationalisations into a priori expectations.

Reporting guidelines are used when the results are written up and reduce the problem of insufficient reporting of study details. When considered early on, they help to collect, record and store data in a suitable manner. They also make it easier to conduct reproduction/replication studies by providing necessary information.

Preprints and data- and code-sharing happen towards the end of the research process. They can happen before publication or together with publication, and in some cases even after publication (e.g. if keeping an embargo on data). Preprints help to mitigate publication bias: negative findings, which tend to be published in journals to a lesser extent, can always find a home in preprints, so a citable, traceable and indexed record of a study can be created irrespective of its outcome. Sharing data, code and metadata helps to overcome the lack of availability of these items and allows the results to be checked, expanded and synthesised. It also makes it easier to run reproduction/replication studies by providing crucial information for them.

The simple checklist in Table 4 suggests how researchers could report on the tools employed in their research in academic publications. Nature ran a survey to evaluate the effectiveness of its checklist on key factors around reproducibility that authors who submit to Nature need to complete. A total of 49% of respondents felt the checklist had helped to improve the quality of published research (Nature 2018). The proportion of authors of papers in Nature journals who state explicitly whether they have carried out blinding, randomisation and sample size calculations has increased after implementation of the checklist (Han et al. 2017; Macleod 2017). Whilst a journal-mandated checklist is almost certainly more effective than a voluntary suggestion as below, it is worth noting that 58% of survey respondents indicated that researchers have the greatest capacity to improve the reproducibility of published work (Nature 2018).

Table 4: Checklist for the reporting of tools that promote transparency, reproducibility and quality (TReQ) of research

| Tools | Delete as applicable | Comments |
|---|---|---|
| Preregistration | | |
| This study has a pre-analysis plan | Yes / No | Explanation if no: |
| If yes | | |
| URL | {insert link} | |
| Was it registered before data collection? | Yes / No | Explanation if no: |
| Does the paper mention and explain deviations from the PAP? | Yes / No / Not applicable (no deviations) | If yes, specify section of paper, or explanation if no: |
| Reporting guidelines | | |
| This paper follows a reporting guideline | Yes / No | |
| If yes | | |
| Which one? | {insert name and citation, include in reference list} | |
| Open data and code | | |
| Data/code are publicly available | Yes, data and code / Yes, data only / Yes, code only / No | |
| Does the paper make a statement on data and code availability? | Yes, on data and code / Yes, on data only / Yes, on code only / No | Refer to relevant section or include here: |
| If yes | | |
| What is/are the link(s)? | {insert link(s)} | |
| Have steps been taken to ensure the data are FAIR? | Yes / No | |
| Have metadata been uploaded? | Yes / No | |
| Preprints | | |
| Have you uploaded a preprint? | Yes / Planned following submission / No | |
| If yes | | |
| What is the link? | {insert link} | |
| If planned | | |
| Which preprint server/location? | | |

Researchers could attach a filled template to their paper as an appendix (for a stand-alone document that could be downloaded and used by readers, see the supplemental data online). This would (1) aid others in judging the extent to which the work used good practices related to transparency; (2) aid others in finding all additional resources easily; and (3) help author(s) to check to what extent they have followed good research practices and what additional actions they could take.

4. Discussion and conclusions

In this paper, four easy-to-use, widely applicable tools are suggested to improve the transparency, reproducibility and quality (TReQ) of research. Information and guidance are provided on pre-analysis plans (PAPs), reporting guidelines, open data and code, and preprints, and a checklist is presented that researchers could use to show how they have made use of these tools. Almost all energy researchers could easily (and should) now integrate all or most of these tools, or at least consider and justify not doing so. As argued here, this should result in a range of benefits for researchers and research users alike.

These four reflect only a selection of possible tools. They were chosen to be as widely applicable as possible so that researchers using different methods could apply them. Other approaches are also worthy of wider uptake. For example, a little-discussed but important issue concerns the quality of literature reviews, whether undertaken as part of the foundation of any normal research project or as a method in its own right. Missing or misrepresenting work risks needless duplication of research or the promulgation of incorrect interpretations of previous work (Phelps 2018). A solution is to employ more systematic approaches that use structured methods to minimise bias and omissions (Grant & Booth 2009). However, systematic reviews were not covered here because the main TReQ aspects of such reviews (such as reporting of search strategies, inclusion criteria, etc.) are not usually found in the background literature review sections of standard articles; rather, they are restricted to review articles where the review is the research method. Approaches to improving the quality of literature review sections have been proposed, such as cumulative literature reviews which can be reused (Vaganay 2018) or the employment of automated approaches (Marshall & Wallace 2019), but are beyond the scope of this paper. Similarly, some researchers, such as those working with a large quantitative data set, might consider simulating data on which to set up and test an analysis before deploying it on the real data.

It should also be stressed that there are several other practices that, whilst not necessarily directly contributing to TReQ, are crucial for good research. These include ethics, accurate acknowledgements of the contribution of other researchers, being open about conflicts of interest, and following the legal obligations of employers. Most universities provide details and policies on the academic conduct that is expected from all staff (National Academy of Sciences et al. 1992). Crucially, all these approaches (including TReQ) are important conditions for research quality, but they are not on their own sufficient conditions. For example, how findings from a study translate to other times, situations and people, i.e. its external validity, will strongly depend on study design and method (for how to improve methods and research design, see, e.g. Sovacool et al. 2018).

Another important point to consider is how to ensure that the tools are not simply deployed as ‘window dressing’ and misused to disguise ongoing questionable research practices (QRP) under a veneer of transparency. For example, an analysis of PAPs showed that a significant proportion failed to specify necessary information, e.g. on covariates or outlier correction, and that corresponding manuscripts did not report all prespecified analyses (Ofosu & Posner 2019).

To ensure that both open data and open code are usable for others, detailed documentation needs to be provided. Standards for meta-documentation should be considered (e.g. Day 2005; Center for Government Excellence 2016). It has been shown that poor quality data are prevalent in large databases and on the web (Saha & Srivastava 2014). Regarding reporting guidelines, a potential issue arises from their relative unfamiliarity to both authors and reviewers. Hence, there is the risk that they are only partially or poorly implemented and that reviewers will have difficulty evaluating them. Even in the medical field, in which reporting guidelines are widely used, research showed that only half of reviewer instructions mentioned reporting guidelines, and not necessarily in great detail (Hirst & Altman 2012).

Finally, while this paper attempts to convince individual readers of the benefits of adopting these tools, the authors acknowledge that widespread use is unlikely to come about without more structural adjustments to the energy research ecosystem. Such adjustments are needed in several areas. Tools for better research practices should become a standard part of (at least) postgraduate education programmes and be encouraged by thesis supervisors. Journals, funders, academic and other research institutions, and regulatory bodies have an important role to play in increasing knowledge and usage. This could involve approaches such as signposting reporting guidelines (Simera et al. 2010) and working with editors and peer reviewers to increase the awareness of the need for such approaches. Further examples include partnerships between funders and journals, with funders offering resources to carry out research accepted by the journal as a registered report (Nosek 2020).

References

- AECOM. (2012). Investigation into overheating in homes. Literature review. AECOM with UCL and London School of Hygiene and Tropical Medicine.

- Anvari, F., & Lakens, D. (2018). The replicability crisis and public trust in psychological science. Comprehensive Results in Social Psychology, 3(3), 266–286. doi: 10.1080/23743603.2019.1684822

- Baker, M., & Penny, D. (2016). Is there a reproducibility crisis? Nature, 533(May 26), 452–454. doi: 10.1038/533452a

- Ball, C. (2009). What is transparency? Public Integrity, 11(4), 293–308. doi: 10.2753/PIN1099-9922110400

- Banks, G.C., O’Boyle, E.H., Pollack, J.M., White, C.D., Batchelor, J.H., Whelpley, C.E., … Adkins, C.L. (2016a). Questions about questionable research practices in the field of management: A Guest commentary. Journal of Management, 42(1), 5–20. doi: 10.1177/0149206315619011

- Banks, G.C., Rogelberg, S.G., Woznyj, H.M., Landis, R.S., & Rupp, D.E. (2016b). Editorial: Evidence on questionable research practices: The good, the bad, and the ugly. Journal of Business and Psychology, 31, 323–338. doi: 10.1007/s10869-016-9456-7

- Barba, L.A. (2018). Terminologies for reproducible research. ArXiv.

- Begley, C.G., & Ellis, L.M. (2012). Drug development: Raise standards for preclinical cancer research. Nature, 483(7391), 531–533. doi: 10.1038/483531a

- Belcher, B.M., Rasmussen, K.E., Kemshaw, M.R., & Zornes, D.A. (2016). Defining and assessing research quality in a transdisciplinary context. Research Evaluation, 25(1), 1–17. doi: 10.1093/reseval/rvv025

- Budroni, P., Claude-Burgelman, J., & Schouppe, M. (2019). Architectures of knowledge: The European Open Science Cloud. ABI Technik, 39(2), 130–141. doi: 10.1515/abitech-2019-2006

- Button, K.S., Ioannidis, J.P.A., Mokrysz, C., Nosek, B.A., Flint, J., Robinson, E.S.J., & Munafò, M.R. (2013). Power failure: Why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience, 14(5), 365–376. doi: 10.1038/nrn3475

- Center for Government Excellence. (2016). Open data - Metadata guide.

- Chambers, C.D., Feredoes, E., Muthukumaraswamy, S.D., & Etchells, P.J. (2014). Instead of ‘playing the game’ it is time to change the rules: Registered reports at AIMS Neuroscience and beyond. AIMS Neuroscience, 1, 4–17. doi: 10.3934/Neuroscience.2014.1.4

- Chiarelli, A., Johnson, R., Pinfield, S., & Richens, E. (2019). Preprints and scholarly communication: An exploratory qualitative study of adoption, practices, drivers and barriers. F1000Research, 8, 971. doi: 10.12688/f1000research.19619.2

- Claesen, A., Gomes, S.L.B.T., Tuerlinckx, F., & Vanpaemel, W. (2019). Preregistration: Comparing dream to reality. PsyarXiv. doi: 10.31234/osf.io/d8wex

- Collins, G.S., Reitsma, J.B., Altman, D.G., & Moons, K.G.M. (2015). Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD statement. BMJ, 350. doi: 10.1136/bmj.g7594

- Crüwell, S., & Evans, N.J. (2019). Preregistration in complex contexts: A preregistration template for the application of cognitive models. PsyArXiv. doi: 10.31234/osf.io/2hykx

- Day, M. (2005). DCC digital curation manual instalment on metadata (November), 41.

- Dickersin, K., Chan, S., Chalmers, T.C., Sacks, H.S., & Smith, H. (1987). Publication bias and clinical trials. Controlled Clinical Trials, 8(4), 343–353. doi: 10.1016/0197-2456(87)90155-3

- DuBois, J.M., Strait, M., & Walsh, H. (2018). Is it time to share qualitative research data? Qualitative Psychology, 5(3), 380–393. doi: 10.1037/qup0000076

- Easterbrook, S.M. (2014). Open code for open science? Nature Geoscience, 7, 779–781. doi: 10.1038/ngeo2283

- Eisner, D.A. (2018). Reproducibility of science: Fraud, impact factors and carelessness. Journal of Molecular and Cellular Cardiology, 114, 364–368. doi: 10.1016/j.yjmcc.2017.10.009

- Energy Research & Social Science. (n.d.). Homepage.

- Equator Network. (2016). EQUATOR reporting guideline decision tree: Which guidelines are relevant to my work?

- European Commission. (2016a). G20 leaders’ communique Hangzhou Summit.

- European Commission. (2016b). Guidelines on fair data management in Horizon 2020 (December 6).

- European Commission. (2018). Turning FAIR into Reality 2018: Final report and action plan from the European Commission Expert Group on FAIR Data.

- European Commission. (n.d.). Clean energy for all Europeans package|Energy.

- Eysenbach, G. (2006). The open access advantage. Journal of Medical Internet Research, 8(2), e8. doi: 10.2196/jmir.8.2.e8

- Fell, M.J. (2019). The economic impacts of open science: A rapid evidence assessment. Publications, 7, 46. doi: 10.3390/publications7030046

- Ferguson, C.J., & Brannick, M.T. (2012). Publication bias in psychological science: Prevalence, methods for identifying and controlling, and implications for the use of meta-analyses. Psychological Methods, 17(1), 120–128. doi: 10.1037/a0024445

- Frankenhuis, W.E., & Nettle, D. (2018). Open science is liberating and can foster creativity. Perspectives on Psychological Science, 13(4), 439–447. doi: 10.1177/1745691618767878

- George, S.L. (2018). Research misconduct and data fraud in clinical trials: Prevalence and causal factors. Getting to Good: Research Integrity in the Biomedical Sciences, 21, 421–428. doi: 10.1007/s10147-015-0887-3

- Grant, M.J., & Booth, A. (2009). A typology of reviews: An analysis of 14 review types and associated methodologies. Health Information and Libraries Journal, 26, 91–108. doi: 10.1111/j.1471-1842.2009.00848.x

- Han, S.H., Olonisakin, T.F., Pribis, J.P., Zupetic, J., Yoon, J.H., Holleran, K.M., … Lee, J.S. (2017). A checklist is associated with increased quality of reporting preclinical biomedical research: A systematic review. PLoS ONE, 12(9), e0183591. doi: 10.1371/journal.pone.0183591

- Hardwicke, T.E., Wallach, J.D., Kidwell, M.C., Bendixen, T., Crüwell, S., & Ioannidis, J.P.A. (2020). An empirical assessment of transparency and reproducibility-related research practices in the social sciences (2014–2017). Royal Society Open Science, 7(2), 190806. doi: 10.1098/rsos.190806

- Hirst, A., & Altman, D.G. (2012). Are peer reviewers encouraged to use reporting guidelines? A survey of 116 health research journals. PLoS ONE, 7. doi: 10.1371/journal.pone.0035621

- HM Government. (2017). The Clean Growth Strategy: Leading the way to a low carbon future.

- Hoy, M.B. (2020). Rise of the Rxivs: How preprint servers are changing the publishing process. Medical Reference Services Quarterly, 39(1), 84–89. doi: 10.1080/02763869.2020.1704597

- Huebner, G.M. & Mahdavi, A. (2019). A structured open data collection on occupant behaviour in buildings. Scientific Data, 6(1), 292. doi: 10.1038/s41597-019-0276-2

- Huebner, G.M., Nicolson, M.L., Fell, M.J., Kennard, H., Elam, S., Hanmer, C., … Shipworth, D. (2017). Are we heading towards a replicability crisis in energy efficiency research? A toolkit for improving the quality, transparency and replicability of energy efficiency impact evaluations. UCL Discovery, May, 1871–1880.

- Huston, P., Edge, V., & Bernier, E. (2019). Reaping the benefits of open data in public health. Canada Communicable Disease Report, 45(10), 252–256. doi: 10.14745/ccdr.v45i10a01

- IEA. (n.d.). Global EV Outlook 2019: Scaling up the transition to electric mobility. International Energy Agency (IEA).

- John, L.K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth telling. Psychological Science, 23(5), 524–532. doi: 10.1177/0956797611430953

- Kerr, N.L. (1998). HARKing: Hypothesizing after the results are known. Personality and Social Psychology Review, 2(3), 196–217. doi: 10.1207/s15327957pspr0203_4

- Kjærgaard, M.B., Ardakanian, O., Carlucci, S., Dong, B., Firth, S. K., Gao, N., … Zhu, Y. (2020). Current practices and infrastructure for open data based research on occupant-centric design and operation of buildings. Building and Environment, 177, 106848. doi: 10.1016/j.buildenv.2020.106848

- Haven, T., & Van Grootel, D.L. (2019). Preregistering qualitative research. Accountability in Research, 26(3), 229–244. doi: 10.1080/08989621.2019.1580147

- Love, J., & Cooper, A.C. (2015). From social and technical to socio-technical: Designing integrated research on domestic energy use. Indoor and Built Environment, 24(7), 986–998. doi: 10.1177/1420326X15601722

- Macleod, M.R. (2017). Findings of a retrospective, controlled cohort study of the impact of a change in Nature journals’ editorial policy for life sciences research on the completeness of reporting study design and execution. BioRxiv, 187245. doi: 10.1101/187245

- Marshall, I.J., & Wallace, B.C. (2019). Toward systematic review automation: A practical guide to using machine learning tools in research synthesis. Systematic Reviews, 8, 163. doi: 10.1186/s13643-019-1074-9

- Mavrogianni, A., Davies, M., Taylor, J., Chalabi, Z., Biddulph, P., Oikonomou, E., … Jones, B. (2014). The impact of occupancy patterns, occupant-controlled ventilation and shading on indoor overheating risk in domestic environments. Building and Environment, 78, 183–198. doi: 10.1016/j.buildenv.2014.04.008

- McGrath, C., & Nilsonne, G. (2018). Data sharing in qualitative research: Opportunities and concerns. MedEdPublish, 7(4). doi: 10.15694/mep.2018.0000255.1

- Miguel, E., Camerer, C., Casey, K., Cohen, J., Esterling, K.M., Gerber, A., … Van der Laan, M. (2014). Promoting transparency in social science research. Science, 343(6166), 30–31. doi: 10.1126/science.1245317

- Moher, D., Liberati, A., Tetzlaff, J., & Altman, D. G. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ (Online), 339, 332–336. doi: 10.1136/bmj.b2535

- Moravcsik, A. (2014). Transparency: The revolution in qualitative research. PS—Political Science and Politics, 47(1), 48–53. doi: 10.1017/S1049096513001789

- MRC. (n.d.). Good research practice. Medical Research Council (MRC).

- Mueller-Langer, F., & Andreoli-Versbach, P. (2018). Open access to research data: Strategic delay and the ambiguous welfare effects of mandatory data disclosure. Information Economics and Policy, 42, 20–34. doi: 10.1016/j.infoecopol.2017.05.004

- Munafò, M.R., Nosek, B.A., Bishop, D.V.M., Button, K.S., Chambers, C.D., Percie du Sert, N., … Ioannidis, J.P.A. (2017). A manifesto for reproducible science. Nature Human Behaviour, 1(1), 0021. doi: 10.1038/s41562-016-0021

- National Academies of Sciences, Engineering, and Medicine. (2019). Reproducibility and replicability in science. National Academies Press. doi: 10.17226/25303

- National Academy of Sciences, National Academy of Engineering, & Institute of Medicine. (1992). Responsible science: Ensuring the integrity of the research process: Volume I. National Academies Press. doi: 10.17226/1864

- National Center for Dissemination of Disability. (2005). What are the standards for quality research? (Technical Brief No. 9).

- Nature. (2016). Reality check on reproducibility. Nature, 533(May 25), 437. doi: 10.1038/533437a

- Nature. (2018). Checklists work to improve science editorial. Nature, 556(April 19), 273–274. doi: 10.1038/d41586-018-04590-7

- Nicolson, M., Huebner, G.M., Shipworth, D., & Elam, S. (2017). Tailored emails prompt electric vehicle owners to engage with tariff switching information. Nature Energy, 2(6), 1–6. doi: 10.1038/nenergy.2017.73

- Nosek, B. (2020). Changing a research culture toward openness and reproducibility. OSF. doi: 10.17605/OSF.IO/ZVP8K

- Nosek, B.A., Ebersole, C.R., DeHaven, A.C., & Mellor, D.T. (2018). The preregistration revolution. Proceedings of the National Academy of Sciences, USA, 115(11), 2600–2606. doi: 10.1073/pnas.1708274114

- Ofgem. (n.d.). Vulnerable customers & energy efficiency.

- Ofosu, G.K., & Posner, D.N. (2019). Pre-analysis plans: A stocktaking. doi: 10.31222/osf.io/e4pum

- Olken, B.A. (2015). Promises and perils of pre-analysis plans. Journal of Economic Perspectives, 29(3), 61–80. doi: 10.1257/jep.29.3.61

- Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716. doi: 10.1126/science.aac4716

- OSF. (n.d. a). OSF|Templates of OSF Registration Forms Wiki. Open Science Framework (OSF).

- OSF. (n.d. b). OSF Preprints|Search. Open Science Framework (OSF).

- Park, C.L. (2004). What is the value of replicating other studies? Research Evaluation, 13(3), 189–195. doi: 10.3152/147154404781776400

- Pätzold, H. (2005). Secondary analysis of audio data. Technical procedures for virtual anonymization and pseudonymization. Forum Qualitative Sozialforschung, 6(1). doi: 10.17169/fqs-6.1.512

- Pfenninger, S., DeCarolis, J., Hirth, L., Quoilin, S., & Staffell, I. (2017). The importance of open data and software: Is energy research lagging behind? Energy Policy, 101, 211–215. doi: 10.1016/j.enpol.2016.11.046

- Pfenninger, S., Hirth, L., Schlecht, I., Schmid, E., Wiese, F., Brown, T., … Wingenbach, C. (2018). Opening the black box of energy modelling: Strategies and lessons learned. Energy Strategy Reviews, 19, 63–71. doi: 10.1016/j.esr.2017.12.002

- Phelps, R. P. (2018). To save the research literature, get rid of the literature review. Impact of Social Sciences.

- Pienta, A., Alter, G., & Lyle, J. (2010). The enduring value of social science research: The use and reuse of primary research data. Journal of the Bertrand Russell Archives, 71(20), 7763–7772.

- Piwowar, H., Priem, J., Larivière, V., Alperin, J.P., Matthias, L., Norlander, B., … Haustein, S. (2018). The state of OA: A large-scale analysis of the prevalence and impact of Open Access articles. PeerJ, 2018(2). doi: 10.7717/peerj.4375

- Piwowar, H.A., & Vision, T.J. (2013). Data reuse and the open data citation advantage. PeerJ, 2013(1), e175. doi: 10.7717/peerj.175

- Prinz, F., Schlange, T., & Asadullah, K. (2011). Believe it or not: How much can we rely on published data on potential drug targets? Nature Reviews Drug Discovery, 10, 712–713. doi: 10.1038/nrd3439-c1

- Rosenthal, R. (1979). The file drawer problem and tolerance for null results. Psychological Bulletin, 86(3), 638–641. doi: 10.1037/0033-2909.86.3.638

- Saha, B., & Srivastava, D. (2014). Data quality: The other face of big data. Proceedings of the International Conference on Data Engineering, 1294–1297. doi: 10.1109/ICDE.2014.6816764

- Schloss, P.D. (2018). Identifying and overcoming threats to reproducibility, replicability, robustness, and generalizability in microbiome research. mBio, 9(3). doi: 10.1128/mBio.00525-18

- Schulz, K.F., Altman, D.G., & Moher, D. (2010). CONSORT 2010 Statement: Updated guidelines for reporting parallel group randomised trials. BMJ (Online), 340(7748), 698–702. doi: 10.1136/bmj.c332

- Sheldon, T. (2018). Preprints could promote confusion and distortion. Nature, 559, 445. doi: 10.1038/d41586-018-05789-4

- Simera, I., Moher, D., Hirst, A., Hoey, J., Schulz, K.F., & Altman, D.G. (2010). Transparent and accurate reporting increases reliability, utility, and impact of your research: Reporting guidelines and the EQUATOR Network. BMC Medicine, 8, 24. doi: 10.1186/1741-7015-8-24

- Simmons, J.P., Nelson, L.D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22(11), 1359–1366. doi: 10.1177/0956797611417632

- Smith, R. (2006). Peer review: A flawed process at the heart of science and journals. Journal of the Royal Society of Medicine, 99, 178–182. doi: 10.1258/jrsm.99.4.178

- Sovacool, B.K. (2014). What are we doing here? Analyzing fifteen years of energy scholarship and proposing a social science research agenda. Energy Research and Social Science, 1, 1–29. doi: 10.1016/j.erss.2014.02.003

- Sovacool, B.K., Axsen, J., & Sorrell, S. (2018). Promoting novelty, rigor, and style in energy social science: Towards codes of practice for appropriate methods and research design. Energy Research and Social Science, 45, 12–42. doi: 10.1016/j.erss.2018.07.007

- Stroebe, W., Postmes, T., & Spears, R. (2012). Scientific misconduct and the myth of self-correction in science. Perspectives on Psychological Science: Journal of the Association for Psychological Science, 7(6), 670–688. doi: 10.1177/1745691612460687

- Suber, P. (2013). Open Access. MIT Press. doi: 10.7551/mitpress/9286.001.0001

- Tennant, J.P., Waldner, F., Jacques, D.C., Masuzzo, P., Collister, L.B., & Hartgerink, C.H.J. (2016). The academic, economic and societal impacts of Open Access: An evidence-based review. F1000Research, 5, 632. doi: 10.12688/f1000research.8460.3

- Tong, A., Sainsbury, P., & Craig, J. (2007). Consolidated criteria for reporting qualitative research (COREQ): A 32-item checklist for interviews and focus groups. International Journal for Quality in Health Care, 19(6), 349–357. doi: 10.1093/intqhc/mzm042

- UK Data Service. (n.d.). Anonymisation.

- UKCCIS. (2015). What is good quality research? UKCCIS Evidence Group, 151(July), 10–17. doi: 10.1145/3132847.3132886

- UKRI. (n.d.). Making research data open. UK Research and Innovation (UKRI).

- University of Cambridge. (n.d.). Good research practice|Research integrity.

- Vaganay, A. (2018). To save the research literature, let’s make literature reviews reproducible. Impact of Social Sciences.

- van ’t Veer, A.E., & Giner-Sorolla, R. (2016). Pre-registration in social psychology—A discussion and suggested template. Journal of Experimental Social Psychology, 67, 2–12. doi: 10.1016/j.jesp.2016.03.004

- Van den Eynden, V., Corti, L., Woollard, M., Bishop, L., & Horton, L. (2009). Managing and sharing data best practice for researchers.

- Vandewalle, P. (2012). Code sharing is associated with research impact in image processing. Computing in Science and Engineering, 14, 42–47. doi: 10.1109/MCSE.2012.63

- Wagenmakers, E.-J., & Dutilh, G. (2016). Seven selfish reasons for preregistration. APS Observer, 29(9), 13–14.

- Warren, M. (2018). First analysis of ‘pre-registered’ studies shows sharp rise in null findings. Nature. doi: 10.1038/d41586-018-07118-1

- Wells, J.A., & Titus, S. (2006). The Gallup Organization for final report: Observing and reporting suspected misconduct in biomedical research.

- Wilkinson, M.D., Dumontier, M., Aalbersberg, Ij. J., Appleton, G., Axton, M., Baak, A., … Mons, B. (2016). Comment: The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3. doi: 10.1038/sdata.2016.18

Figure 1: Map of the scientific process and the points at which potential issues can arise. The elements of a study form a cycle, beginning with 1 Generate ideas or hypothesis, which can suffer from a lack of reproduction or replication, and moving to 2 Design study, where poor study design can be an issue. The next step is 3 Collect, record and store data, followed by 4 Analyse data, then 5 Interpret data. These three elements can be adversely affected by data manipulation. The next step is 6 Write up results, which together with steps 3, 4 and 5 can be affected by insufficient detail. Step 7 is to publish, where publication bias and a lack of data, code and metadata sharing can all be issues. Steps 1 and 5 can also be affected by turning post-hoc rationalisations into a-priori expectations.

Figure 2: Building on the map in Figure 1, this figure shows which tools address which issues. Preregistration can help to avoid poor study design, data manipulation, and turning post-hoc rationalisations into a-priori expectations. Reporting guidelines improve the collection, recording and storage of data and the writing up of results, and help to address a lack of reproduction or replication. Preprints help to avoid publication bias and a lack of data, code and metadata sharing. Publishing data and code helps to avoid a lack of reproduction or replication, publication bias, and a lack of data, code and metadata sharing.

Publication details

Huebner, G.M., Fell, M.J. & Watson, N.E. 2021. Improving energy research practices: guidance for transparency, reproducibility and quality. Buildings and Cities, 2(1): 1–20. doi: 10.5334/bc.67. Open access.

Banner photo credit: Alireza Attari on Unsplash